Probably the most significant news to come from the recent Shanghai World AI Conference was the announcement that Chinese AI company Z.ai has developed its own AI model, which is significantly cheaper than those of both its local rival DeepSeek and its many giant US competitors: OpenAI, Anthropic, xAI, Meta and Google. Furthermore, Z.ai loudly proclaimed that the model runs on only eight of Nvidia’s H20 chips.

Necessity—That Mother of Invention

To put this in some context, the H20 is the most advanced chip the US allowed to be exported to China under the first Trump and Biden administrations due to national security restrictions. Since the beginning of the second Trump administration, it has been subject to additional restrictions that made it illegal to export to China. Those restrictions were only rescinded earlier this month, leading Nvidia to order the manufacture of 300,000 new H20 chips.

In technology terms, the H20 is much lower tech than Nvidia’s high-grade GB200, which is reflected in the difference in their US retail unit prices: $12,000-$15,000 for the H20 versus $60,000-$70,000 for the GB200. The consequences of imposing a processing restriction (in this case artificially imposed for geopolitical reasons) are interesting. Lack of access to the latest technology has meant that Chinese tech firms have had to make do and get creative by becoming more efficient.

We have of course already seen the shock impact this kind of efficiency improvement had on Nvidia’s stock price at the start of the year, when DeepSeek’s cheaper AI hit the scene. Z.ai’s announcement, by comparison, barely squeaked a mention in the media, and Nvidia’s stock didn’t get thumped. This lack of interest is the very reason AI investors should prick up their ears and start paying attention.

There are three highly important parts to this:

Firstly, Z.ai’s GLM-4.5 model is half the size of DeepSeek’s R1 model (responsible for all the fuss in January).

Secondly, its pricing. AI model pricing is determined by two different values, based on the amount of input and the amount of output. In AI speak, this is measured in units of millions of “tokens”; a token can be considered roughly equivalent to 0.75 of a word. The price for the GLM-4.5 model is $0.11/M tokens for input and $0.28/M tokens for output. By comparison, DeepSeek’s R1 is $0.14/M tokens for input and $2.19/M tokens for output. Both pale in comparison to the upper end of the US mega players: OpenAI’s GPT-4.5, for example, costs $75.00/M tokens for input and $150.00/M tokens for output.

Thirdly, there is Z.ai’s statement that it required only eight H20 chips and has no additional computing equipment requirement.
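To make the per-token prices quoted above more concrete, here is a minimal Python sketch that turns them into a dollar cost for a single workload. The prices and the 0.75 words-per-token rule of thumb are taken from the text; the workload itself (750,000 words of input and 75,000 words of output) and the function names are purely hypothetical, chosen for illustration.

```python
# Prices in USD per million tokens (input, output), as quoted in the article.
PRICES = {
    "GLM-4.5": (0.11, 0.28),
    "DeepSeek R1": (0.14, 2.19),
    "GPT-4.5": (75.00, 150.00),
}

# Rough rule of thumb from the article: one token is about 0.75 of a word.
WORDS_PER_TOKEN = 0.75


def words_to_tokens(words):
    """Convert a word count into an approximate token count."""
    return words / WORDS_PER_TOKEN


def cost_usd(model, input_tokens, output_tokens):
    """Total cost in USD: each side is billed at its own per-million rate."""
    input_price, output_price = PRICES[model]
    return (input_tokens * input_price + output_tokens * output_price) / 1_000_000


# Hypothetical workload (an assumption, not a figure from the article).
input_tokens = words_to_tokens(750_000)   # about 1,000,000 tokens
output_tokens = words_to_tokens(75_000)   # about 100,000 tokens

for model in PRICES:
    print(f"{model}: ${cost_usd(model, input_tokens, output_tokens):.2f}")
```

On this assumed workload the bill comes to roughly $0.14 for GLM-4.5, $0.36 for DeepSeek R1 and $90.00 for GPT-4.5. The gap between the two Chinese models comes almost entirely from the output price, so it widens for workloads that generate more text than they read.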

A Functional Plateau

AI investors need to think about the implications of this. From a benchmarking perspective, GLM-4.5 is performing on par with its much bigger competitors, suggesting that we may be reaching what could be described as a functional plateau with large language model AI.

What do we mean by this? A functional plateau describes a situation where a technology can and will improve over time, but fairly rapidly reaches a point where it achieves most of what is commonly useful. This doesn’t prevent further improvements in efficiency, capacity, etc., but the improvements developed by the trailblazers can slowly filter down into the basic functional version at much lower cost.

For example, think of the car. The functional plateau for a car’s speed is realistically the national highway speed limit (e.g. a US maximum of 85mph). Of course, most cars can well exceed that speed and have been able to do so since 1904. Engine technology has improved and will continue to improve over time. For example, the land speed record for a combustion engine vehicle, 448.757mph, was set in 2018 by a car which was built in 1968! (For those interested, the actual land speed record, held by a jet engine vehicle, is 763.035mph and was set in 1997.)

As one can see, from the perspective of a car’s speed, technological advances have far outstripped the functional plateau for many decades, yet in most cases the additional oomph has little practical function. Anyone who has watched a road-going supercar humbly and timidly drive over a speed bump understands this point.

So in the case of AI, amid the exciting progress being made, it is vital to recognize that, like all technologies, it too will have a functional plateau. It is currently unclear exactly where that will be, but when a relative minnow such as Z.ai can for the most part compete with a behemoth such as OpenAI, it is time for investors to attempt to identify where and when they think that transition is occurring. Determining this helps define when an exponential growth phase shifts into a normal growth phase.

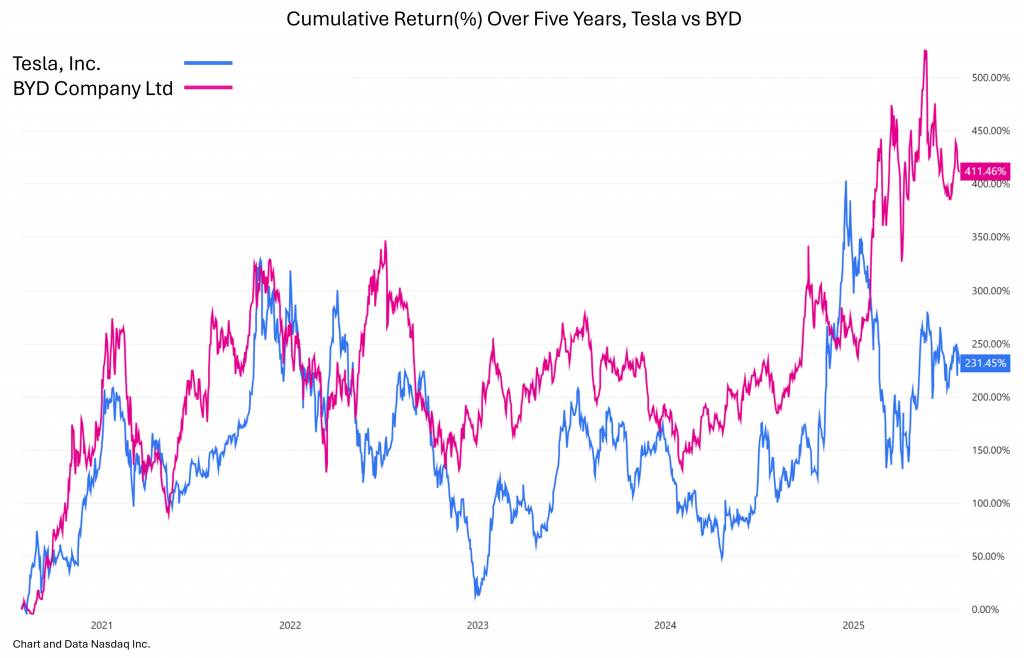

To end, let us briefly look at an example which has certain parallels. The chart below shows the cumulative returns of Tesla and its Chinese rival BYD over the past five years.

The initial observation is that BYD has almost double the return of Tesla for the period. This is Tesla after its initial exponential growth, a point somewhat akin to the stage some of the major AI players are at now. During these five years, BYD’s share of the global EV market went from 6% in 2020 to 22% at the end of 2024. Tesla, on the other hand, went from 18% in 2020 to 10% in 2024. This illustrates how a more basic, cheaper, functional version of a technology can quietly outstrip its flashier, more expensive pioneering rival. The question is, are we beginning to witness an equivalent in the AI space? ■

© 2025 Plotinus Asset Management. All rights reserved.


Image Credit: AI at Shutterstock.